
Real-time AI translation is already here, and the market offers a wide range of products with this functionality. But our client didn’t wait for AI to become popular before entering this space: they were among the first to apply AI to translation.

The idea of creating a real-time AI translation app came up during international calls with partners speaking multiple languages. Our client saw an opportunity to make conversations more efficient, with fewer missed nuances, by using AI.

That’s how the project began. It developed successfully until growth itself became a challenge because of infrastructure limitations. The client started to face downtime during updates, rising operational costs, and security concerns, among other issues.

The client decided to rethink their entire technical foundation, and this is when the team came to us. What results did we achieve, and how exactly did we do it? Let’s find out.

The “Before” State

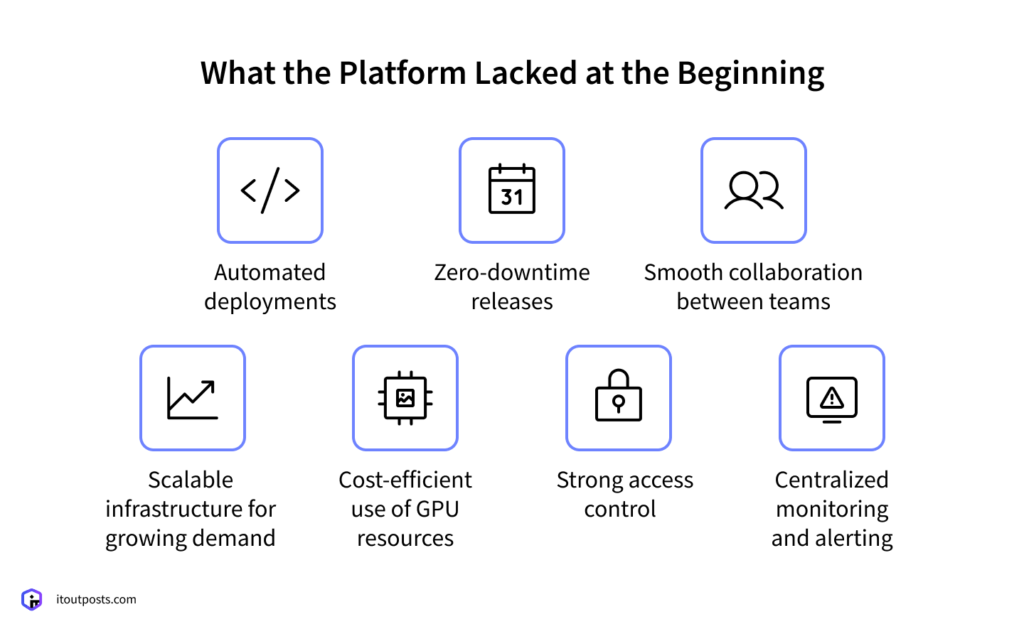

First, it’s necessary to look at the initial state of the infrastructure to understand what limited growth and what specific issues the client faced during development, infrastructure management, and feature releases.

At the beginning, our client ran their services on basic virtual machines hosted on Hetzner, with no CI/CD pipeline. This setup was sufficient in the early stages, when the client needed to test the idea in practice. As the platform evolved, however, the team found the release process increasingly inconvenient. Here’s what it looked like:

- Fully manual and slow (since there was no CI/CD pipeline in place, each release involved manually placing updated code on production servers and often restarting applications)

- Error-prone (the many manual steps in each release increased the chance of human error)

- Disruptive, often causing downtime during deployments (the service often had to be stopped while updates were applied, which made it unavailable to users during this period)

In addition, as the platform expanded and new services were introduced, the number of engineers involved grew. Multiple outsourced development teams worked on the project, each responsible for different services, such as text translation and lip-syncing.

But the initial setup was built in such a way that there was minimal isolation between services and, thus, teams. This created more chances for engineers to accidentally interfere with each other’s work.

This also raised security concerns, since multiple teams had wider access to the system than they actually needed. Such broad access made the system harder to protect, even from unintentional mistakes.

Finally, many of the platform’s AI services required compute nodes equipped with GPUs for video processing. Without the right cost optimization strategy in place, these resources can cost far more than they should.

All of the difficulties mentioned above were symptoms of a foundation that hadn’t been designed for the demands of a fast-growing, AI-driven product.

The development and deployment process turned out to be inefficient for the project’s new goals, such as effective (and cost-effective) experimentation with AI models and frequent feature releases with minimal downtime. Security risks were also present. At the same time, costs started to become disproportionately high compared to the value the initial setup could bring to the business.

That’s when it became clear a different approach was needed to support further scaling and growth.

The Turning Point: What Had to Change

During our initial consultations, the client defined a set of priorities for the next stage of growth:

- Faster releases without service interruptions

- Scalable infrastructure that could handle high demand

- Cost-efficient GPU utilization

- Stronger security controls across multiple development teams

- Better observability to understand how the systems perform and the expenses of running them

Designing the New Infrastructure

After evaluating the available options, we chose the one that best fit both business and technical needs. Together with the client, we decided to rebuild the platform using Kubernetes on Google Cloud.

Migration to Kubernetes on Google Cloud

With Terraform, the entire Google Cloud environment was built from the ground up, including Kubernetes clusters and node pools optimized for different workloads, such as GPU-intensive video processing and standard application services. Thanks to Kubernetes, services can now automatically scale up when demand increases and scale down when it drops.
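The article does not say which autoscaling mechanism was configured. As a rough illustration, assuming the Kubernetes Horizontal Pod Autoscaler, the replica count follows a simple ratio rule: scale the current replica count by how far the observed metric is from its target. The sketch below reproduces that rule in plain Python; the metric values, replica bounds, and function name are hypothetical.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Replica count suggested by an HPA-style ratio rule."""
    ratio = current_metric / target_metric
    # Inside the tolerance band the replica count is left unchanged,
    # which avoids constant scaling up and down on small fluctuations.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    # Clamp the result to the configured bounds.
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    # Hypothetical numbers: 4 replicas at 90% average CPU, target 60%.
    print(desired_replicas(4, 90, 60))
```

With a target of 60% and an observed 90%, four replicas become six; when load falls to 30%, the same rule brings the count down to two.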

Our team also rethought the release process. New versions of services can now be rolled out gradually, going through health checks. This allows the client’s teams to ensure everything is working as expected before the new version starts to handle all user requests. If an issue appears, the system can pause or roll back the update without affecting users.
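The control flow of such a gradual release can be sketched in a few lines. This is a plain-Python simulation, not the project's actual deployment tooling, which the article does not name. The traffic steps and the `is_healthy` callback are hypothetical stand-ins for real traffic shifting and real health checks.

```python
from typing import Callable, List, Tuple


def gradual_rollout(steps: List[int],
                    is_healthy: Callable[[int], bool]) -> Tuple[str, int]:
    """Shift traffic to the new version step by step.

    steps      -- increasing traffic percentages for the new version
    is_healthy -- hypothetical health check, called after each shift
    Returns (outcome, percent of traffic left on the new version).
    """
    for percent in steps:
        if not is_healthy(percent):
            # A failed check sends all traffic back to the old version.
            return ("rolled_back", 0)
    # Every step passed its check, so the new version takes all traffic.
    return ("promoted", 100)


if __name__ == "__main__":
    print(gradual_rollout([10, 50, 100], lambda percent: True))
    print(gradual_rollout([10, 50, 100], lambda percent: percent < 50))
```

The key property is that a failure at any step is caught while only part of the traffic is exposed to the new version.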

Getting Multiple Teams to Work as One

When many teams work on the same product, the project’s success depends on more than just technology. Each team has its own habits and assumptions. Without common rules, teams may work differently, which may lead to duplicated efforts or mistakes, even when everyone is trying to do the right thing.

This is exactly where DevOps shows its real value. It isn’t only about automation: it’s a set of practices designed to help people work together. To make sure all our client’s teams could work as a single mechanism, we needed everyone to follow the same approach to development and releases.

So, we introduced the “kick-off file.” Now, every new developer joining the project receives a standardized checklist with answers to the questions that new team members would otherwise have to answer through trial and error. The checklist covers the following:

- Application ports

- Git repositories

- Sensitive data handling

- Logging locations and formats

- Compute resource requirements (CPU/RAM)

- Permission policies
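The article does not show the kick-off file itself. One way to make such a checklist enforceable is to keep it as structured data and check it for gaps automatically. The sketch below assumes a dictionary-based format; the field names and example values are hypothetical.

```python
# Hypothetical section names, mirroring the checklist items above.
REQUIRED_FIELDS = [
    "application_ports",
    "git_repositories",
    "sensitive_data_handling",
    "logging",
    "compute_resources",
    "permission_policies",
]


def missing_fields(kickoff: dict) -> list:
    """Return checklist sections that are absent or left empty."""
    return [name for name in REQUIRED_FIELDS if not kickoff.get(name)]


# Illustrative kick-off file for an imaginary service.
example = {
    "application_ports": [8080],
    "git_repositories": ["<repo-url>"],
    "sensitive_data_handling": "secrets are injected at runtime, never committed",
    "logging": {"location": "stdout", "format": "json"},
    "compute_resources": {"cpu": "500m", "memory": "512Mi"},
    "permission_policies": [],  # left empty on purpose
}

if __name__ == "__main__":
    print(missing_fields(example))
```

Running a check like this when a new service is onboarded would flag the empty `permission_policies` section before anything is deployed.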

We also ran on-demand knowledge-sharing sessions—conversations about Kubernetes basics, cloud-native best practices, and more. Developers could ask questions and align their workflows with their colleagues.

Building Boundaries Between Services

To prevent services from interfering with each other, we separated resources. Each application was given its own isolated environment, with dedicated limits for compute and memory usage. In particular, this ensures that heavy workloads, such as video processing, won’t consume resources needed by other services.

To avoid sharing sensitive credentials, we used Google’s Workload Identity, which automatically links each service to its own Google service account. Developers no longer need to request, share, or rotate credentials, or worry about them leaking: access just works, based on the service they’re working with.
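To make the two isolation measures concrete, here is a minimal sketch that builds the Kubernetes objects involved as Python dictionaries. It assumes the isolated environment is a namespace with a `ResourceQuota`, which the article does not confirm. The `iam.gke.io/gcp-service-account` annotation is the one GKE uses to link a Kubernetes service account to a Google service account; the application name, project ID, and limits are hypothetical.

```python
def isolation_manifests(app: str, cpu_limit: str, memory_limit: str,
                        gcp_project: str) -> list:
    """Build per-application isolation objects (assumed layout)."""
    namespace = {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {"name": app},
    }
    # Caps the total CPU and memory limits of all pods in the namespace.
    quota = {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{app}-quota", "namespace": app},
        "spec": {"hard": {"limits.cpu": cpu_limit,
                          "limits.memory": memory_limit}},
    }
    # Links the Kubernetes service account to a Google service account,
    # so pods need no exported key files.
    service_account = {
        "apiVersion": "v1",
        "kind": "ServiceAccount",
        "metadata": {
            "name": app,
            "namespace": app,
            "annotations": {
                "iam.gke.io/gcp-service-account":
                    f"{app}@{gcp_project}.iam.gserviceaccount.com",
            },
        },
    }
    return [namespace, quota, service_account]


if __name__ == "__main__":
    for manifest in isolation_manifests("lip-sync", "8", "32Gi", "example-project"):
        print(manifest["kind"], manifest["metadata"]["name"])
```

The annotation alone is not sufficient in practice: the Google service account also needs an IAM binding that allows the Kubernetes service account to impersonate it, which is not shown here.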

Giving Video Processing Workload the Resources It Needs

Video processing is one of the most demanding parts of our client’s platform, and this is the part where the infrastructure is under the most pressure. Video processing workloads need GPUs, and they need them to be available exactly when the service asks for them.

So, as we mentioned earlier, alongside the standard compute nodes, we created a dedicated Kubernetes node pool specifically for video processing. This pool is made up of virtual machine instances designed for GPU-intensive tasks and preconfigured with the required video drivers and supporting libraries.

This way, video-processing services run reliably and don’t have to compete with lighter services for resources.
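On GKE, a workload typically lands on a GPU node pool by requesting the `nvidia.com/gpu` resource and selecting nodes by their `cloud.google.com/gke-accelerator` label. The sketch below builds such a pod manifest as a Python dictionary. The pod name, image, and accelerator type are hypothetical, and the article does not state which GPU model the client uses.

```python
def gpu_pod_spec(name: str, image: str, accelerator: str, gpus: int = 1) -> dict:
    """Pod manifest that requests GPUs and targets GPU nodes on GKE."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # GKE labels GPU nodes with the accelerator type they carry.
            "nodeSelector": {"cloud.google.com/gke-accelerator": accelerator},
            "containers": [{
                "name": name,
                "image": image,
                # GPUs are requested through an extended resource name.
                "resources": {"limits": {"nvidia.com/gpu": gpus}},
            }],
        },
    }


if __name__ == "__main__":
    # Illustrative values only.
    pod = gpu_pod_spec("video-worker", "example/video-worker:latest",
                       "nvidia-tesla-t4")
    print(pod["spec"]["nodeSelector"])
```

Pods that do not request a GPU are kept off these nodes, which is what keeps lighter services from occupying expensive capacity.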

Making the Platform Observable and Predictable

As the project expands in both functionality and user base, strong observability becomes increasingly important.

To make the platform understandable at a glance, we set up a full monitoring and alerting stack. Every critical service now reports metrics, so the client’s teams can see in real time how the system behaves under different loads. Historical data is visualized through custom dashboards, which makes it easy to spot trends and notice early warning signs.

Here’s what the monitoring stack consists of:

- Prometheus for metrics storage

- Grafana for visual analytics

- Prometheus Alertmanager for threshold-based notifications

Whenever metrics cross defined thresholds, alerts are automatically sent to a dedicated Slack channel. Engineers can respond proactively, without having to constantly monitor dashboards.
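In the real stack, Prometheus evaluates the alerting rules and Alertmanager routes the resulting notifications to Slack. The plain-Python sketch below only mimics the idea behind a rule with a hold period: an alert fires when a metric stays above its threshold for several consecutive samples, not on a single spike. The alert name, threshold, and sample values are hypothetical, and no message is actually sent.

```python
import json


def firing(samples, threshold, for_samples):
    """True when the last `for_samples` values are all above the threshold."""
    if len(samples) < for_samples:
        return False
    return all(value > threshold for value in samples[-for_samples:])


def slack_payload(alert_name, value, threshold):
    """JSON body in the simple {"text": ...} shape Slack webhooks accept."""
    return json.dumps({"text": f"[FIRING] {alert_name}: {value} > {threshold}"})


if __name__ == "__main__":
    gpu_utilization = [0.50, 0.92, 0.95, 0.97]  # hypothetical samples
    if firing(gpu_utilization, threshold=0.9, for_samples=3):
        print(slack_payload("GpuUtilizationHigh", gpu_utilization[-1], 0.9))
```

Requiring several consecutive breaches is what keeps the Slack channel quiet during short, harmless spikes.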

Controlling the Cloud Bill

Last but not least, the cost question. Every technical decision we make on the project is made with an eye on how it affects our client’s bottom line.

AI workloads have a habit of quietly driving cloud bills through the roof. For the client’s platform, this was especially true because many core services depend on GPUs.

To keep cloud costs under control, we suggested committing to a fixed amount of cloud resources for three years instead of paying for everything on demand. This lowers prices significantly, while still providing the performance the platform needs.
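The trade-off behind a commitment can be checked with simple arithmetic. The sketch below compares a committed plan with pure on-demand pricing. The hourly rate, discount, and usage figures are hypothetical and are not the client's numbers or Google Cloud's published prices.

```python
def monthly_cost(usage_hours, committed_hours, on_demand_rate, discount):
    """Monthly bill for one resource under a commitment (illustrative model)."""
    committed_rate = on_demand_rate * (1 - discount)
    # Committed hours are billed whether or not they are used.
    committed_cost = committed_hours * committed_rate
    # Usage beyond the commitment is billed at the on-demand rate.
    overflow_hours = max(0, usage_hours - committed_hours)
    return committed_cost + overflow_hours * on_demand_rate


if __name__ == "__main__":
    rate, discount = 2.0, 0.5  # hypothetical hourly rate and discount
    print(monthly_cost(730, 0, rate, discount))    # on demand, always on
    print(monthly_cost(730, 730, rate, discount))  # fully committed
    print(monthly_cost(300, 730, rate, discount))  # commitment mostly idle
```

Under these assumed numbers, a commitment halves the bill for an always-on GPU but costs more than on-demand pricing when the GPU runs only 300 hours a month, which is why commitments suit steady baseline load.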

Results

With the new infrastructure in place, the client can now fully focus on their goals. They’re able to experiment with AI safely and cost-effectively, release updates faster with minimal downtime, and scale the platform to meet high demand. Just as importantly, feedback from the development teams has been positive: their work has become more structured, more predictable, and more enjoyable.

Let’s Build the Right Foundation for Your AI Product

This project was rewarding for us as well. Together with the client, we achieved the goals that were set from the start, delivering a solution that balances technical requirements with real business needs and helping bring such a complex and impactful product to life.

If you’re building or scaling an AI-driven product of your own, contact us! We at IT Outposts can help you design an infrastructure that supports experimentation, performance, and sustainable growth, so your technology can reach its full potential.
