Your code just passed all tests and deployed seamlessly to production. Zero downtime, zero stress.

This isn’t luck. It’s the result of a well-crafted CI/CD pipeline that catches issues early and lets you ship code with confidence. Most development teams know CI/CD is important, but not all get it right. Many end up with slow pipelines, unreliable tests, and deployments that still make everyone nervous.

Every Decision in Your Pipeline Should Optimize for These Core Outcomes

When we talk about CI/CD success, three metrics prove whether your investment in automation is paying off:

- Zero downtime deployments. Your users shouldn’t know when you’re deploying. When downtime does occur, it’s a signal that something in your deployment strategy needs fixing.

- Speed from commit to production. The faster your pipeline, the faster you can respond to bugs, ship features, and iterate on feedback. Slow pipelines break your team’s rhythm and force developers to switch between tasks while they wait.

- Code quality assurance. Your pipeline is the last line of defense before code reaches users. This means comprehensive testing, security scanning, code quality checks, and performance validation. By the time the code deploys, you should have complete confidence that it won’t break anything.

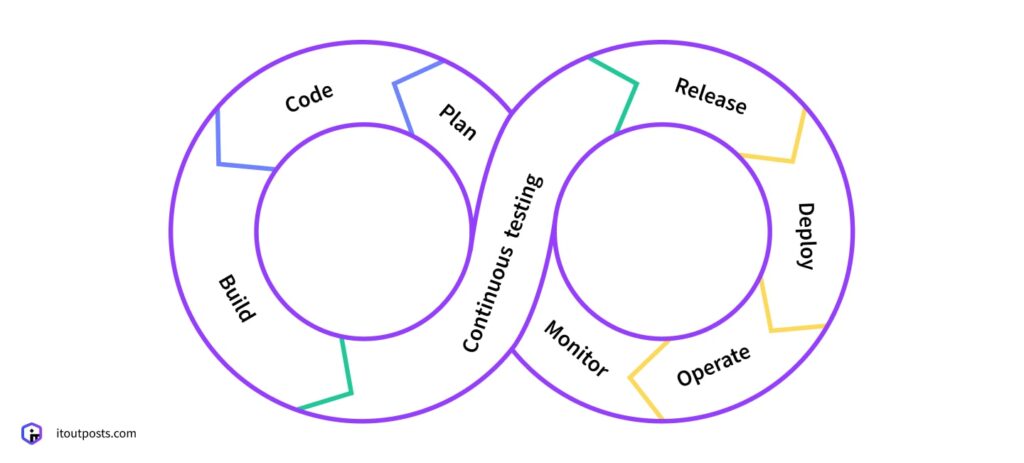

What a Basic CI/CD Pipeline Looks Like

Before we get to advanced CI/CD best practices, let’s start with the foundation. Every sophisticated pipeline starts with mastering the basics.

A basic CI/CD pipeline starts with a Dockerized application, that is, an application with a Dockerfile. The CI part builds your Docker image and pushes it to a registry, where it is stored. The CD part then handles delivery: you pull that image and deploy it to your environment, whether staging or production.

Basic CI/CD can also be extended a bit. Some branches deploy automatically, others require manual approval or triggers. You might have different deployment strategies for different environments. This still counts as basic because the fundamentals remain the same: build, push, pull, deploy.
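The build, push, pull, deploy flow described above can be sketched in a few lines. This is a minimal illustration rather than a production pipeline: the registry host, application name, container name, and the `should_auto_deploy` rule are assumptions made up for the example. The functions only assemble the Docker commands, so they can be inspected without a Docker daemon.

```python
from typing import List, Sequence


def image_ref(registry: str, app: str, commit_sha: str) -> str:
    """Build an image reference tagged with the short commit SHA."""
    return f"{registry}/{app}:{commit_sha[:7]}"


def ci_commands(image: str, context: str = ".") -> List[List[str]]:
    """CI half: build the image from the Dockerfile, then push it to the registry."""
    return [
        ["docker", "build", "-t", image, context],
        ["docker", "push", image],
    ]


def cd_commands(image: str, container: str) -> List[List[str]]:
    """CD half: pull the image, then start it in the target environment."""
    return [
        ["docker", "pull", image],
        ["docker", "run", "-d", "--name", container, image],
    ]


def should_auto_deploy(branch: str, auto_branches: Sequence[str] = ("main",)) -> bool:
    """Hypothetical rule: listed branches deploy automatically, others wait for approval."""
    return branch in auto_branches


# Hypothetical values, for illustration only.
image = image_ref("registry.example.com", "shop", "abcdef1234")
plan = ci_commands(image) + cd_commands(image, "shop-staging")
```

To actually run the plan, each command list could be passed to `subprocess.run(cmd, check=True)`, which stops the pipeline on the first failing step.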

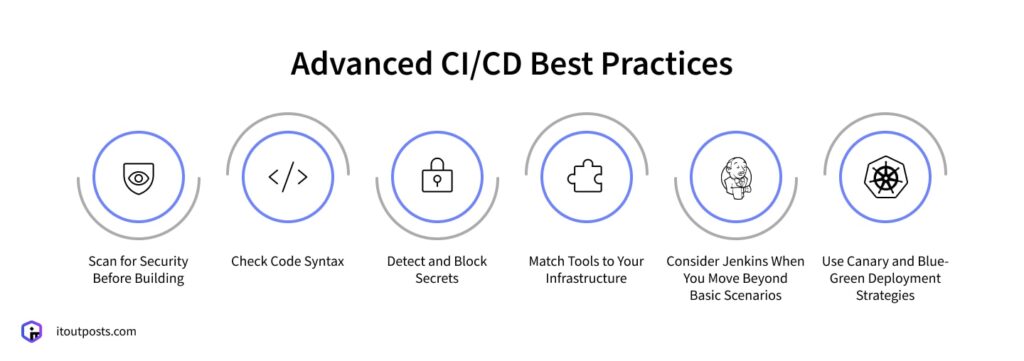

Advanced CI/CD Best Practices

A correctly built basic CI/CD pipeline already works well, but there is always room to improve it. Here are the advanced CI/CD best practices we follow at IT Outposts.

Scan for Security Before Building

Beyond the build itself, you can add code verification checks. For example, before the build starts, you run the repository through vulnerability scanning to check the code and dependencies you’re about to package.

Say someone is using an outdated version of some framework that has known vulnerabilities. These security scans will detect and flag this.

The scanning happens automatically as part of your pipeline, catching security issues before they get baked into your Docker images. When vulnerabilities are found, developers get immediate feedback about what needs to be updated or fixed.

This approach shifts security left: checks move to an earlier stage of your development process, where problems are cheaper to fix.
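To make the idea concrete, here is a minimal sketch of a pre-build dependency check. It assumes dependencies are pinned as `name==version` lines, and the `ADVISORIES` table is invented for the example (the package `exampleframework` and its fixed version are hypothetical). A real pipeline would rely on a dedicated scanner backed by a maintained vulnerability database rather than a hand-written table.

```python
from typing import Dict, List, Tuple

Version = Tuple[int, ...]

# Hypothetical advisory data: package name -> first version that contains the fix.
ADVISORIES: Dict[str, str] = {"exampleframework": "2.4.1"}


def parse_version(text: str) -> Version:
    """Turn '2.10.0' into (2, 10, 0) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def parse_requirements(lines: List[str]) -> Dict[str, str]:
    """Collect 'name==version' pins, ignoring comments and unpinned lines."""
    pins: Dict[str, str] = {}
    for line in lines:
        line = line.split("#", 1)[0].strip()
        if "==" not in line:
            continue
        name, version = line.split("==", 1)
        pins[name.strip().lower()] = version.strip()
    return pins


def find_vulnerable(pins: Dict[str, str],
                    advisories: Dict[str, str] = ADVISORIES) -> List[str]:
    """Report every pinned package that is older than its fixed version."""
    findings = []
    for name, version in sorted(pins.items()):
        fixed = advisories.get(name)
        if fixed and parse_version(version) < parse_version(fixed):
            findings.append(
                f"{name} {version} is vulnerable; upgrade to {fixed} or later"
            )
    return findings
```

The pipeline would fail the job whenever `find_vulnerable` returns a non-empty list, which gives developers the immediate feedback described above.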

Check Code Syntax

Next comes syntax scanning, often called linting. It checks for proper indentation, punctuation, and characters that shouldn’t appear in your code, and it flags code that needs rework because it’s incorrect.

When the scanner finds problems, it points out exactly what needs fixing, whether it’s inconsistent spacing, missing semicolons, or improper variable naming.
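As a rough sketch of what such a check does, the function below validates Python source with the standard library’s `ast.parse` and adds two simple style rules. The rule set and the message format are assumptions chosen for the example. In practice, teams usually run an established linter for their language instead of writing their own.

```python
import ast
from typing import List


def check_source(source: str, filename: str = "<pipeline>") -> List[str]:
    """Return human-readable problems found in a piece of Python source."""
    problems = []

    # Syntax: ast.parse raises SyntaxError (including IndentationError) on invalid code.
    try:
        ast.parse(source, filename=filename)
    except SyntaxError as err:
        problems.append(f"{filename}:{err.lineno}: syntax error: {err.msg}")

    # Style: two illustrative rules, checked line by line.
    for number, line in enumerate(source.splitlines(), start=1):
        if line != line.rstrip():
            problems.append(f"{filename}:{number}: trailing whitespace")
        indent = line[: len(line) - len(line.lstrip())]
        if "\t" in indent:
            problems.append(f"{filename}:{number}: tab used for indentation")
    return problems
```

Each message carries the file name and line number, so the pipeline log points to exactly what needs fixing.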

Detect and Block Secrets

We then configure secret detection. It scans your code for passwords, API keys, and other secrets that shouldn’t be hard-coded. When it finds one, it fails the pipeline, so the code won’t reach the build stage until you remove the secret.

This practice protects your application from one of the most common security mistakes. Hard-coded secrets in images can be extracted and misused, but secret detection ensures they never make it that far in your pipeline.
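A simplified version of this check can be written with a few regular expressions. The patterns below are illustrative assumptions, not a complete rule set: one matches the general shape of an AWS access key ID, one matches a PEM private key header, and one matches quoted values assigned to credential-like names. Dedicated secret scanners ship far more rules and also inspect Git history.

```python
import re
from typing import List, Tuple

# Illustrative patterns only; real scanners use much larger rule sets.
SECRET_PATTERNS = {
    "AWS access key ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private key block": re.compile(r"-----BEGIN (?:[A-Z]+ )?PRIVATE KEY-----"),
    "hard-coded credential": re.compile(
        r"(password|passwd|secret|api_?key|token)\s*[:=]\s*['\"][^'\"]{4,}['\"]",
        re.IGNORECASE,
    ),
}


def scan_text(text: str) -> List[Tuple[int, str]]:
    """Return (line number, finding label) for every suspicious line."""
    findings = []
    for number, line in enumerate(text.splitlines(), start=1):
        for label, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((number, label))
    return findings


def pipeline_exit_code(findings: List[Tuple[int, str]]) -> int:
    """A non-zero exit code fails the job, so the build stage never starts."""
    return 1 if findings else 0
```

Reading the value from the environment, for example `password = os.environ["DB_PASSWORD"]`, passes this scan because no literal secret appears in the code.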

Match Tools to Your Infrastructure

While tools alone don’t define release success (strategy does that), the choice of tools still impacts your pipeline’s effectiveness and maintainability.

The key is matching your tools to your infrastructure and team needs.

There are many tools we can use: Jenkins, GitLab CI/CD, GitHub Actions, CircleCI, and Bitbucket Pipelines. All of these work well. We can also use cloud-native solutions like AWS CodeBuild and CodeDeploy.

If you’re using vendor-specific services like Amazon ECS, it’s simpler to use CodeDeploy, since it’s designed specifically for AWS environments. If you’re running Kubernetes, Flux CD and Argo CD make more sense because they’re built specifically for Kubernetes deployments.

Consider Jenkins When You Move Beyond Basic Scenarios

Solutions like GitLab CI/CD or GitHub Actions cover most CI/CD needs without requiring additional tools.

These tools provide sufficient functionality for straightforward deployment workflows, automated testing, and basic security checks. They integrate with your repository, handle common deployment patterns, and offer good documentation and community support.

However, you might face deployment tasks that aren’t trivial and need something more custom, tasks that go beyond the boundaries of GitHub and GitLab environments.

For example, you might need to manage external services through their APIs, or automate something you normally do by hand by clicking through interfaces.

Or say you built an image and want to deploy it, but first, you need to clear the cache. Everything runs, the cache gets cleared, and then deployment happens.
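The ordering requirement in that example, where the cache must be cleared successfully before deployment begins, can be sketched as a small step runner. The step names and callables here are hypothetical stand-ins. In Jenkins, each one would typically be a stage in the pipeline definition.

```python
from typing import Callable, List, Tuple

# A step is a name plus a callable that returns True on success.
Step = Tuple[str, Callable[[], bool]]


def run_steps(steps: List[Step]) -> List[str]:
    """Run steps in order and stop at the first failure; return a log of results."""
    log = []
    for name, action in steps:
        ok = action()
        log.append(f"{name}: {'ok' if ok else 'failed'}")
        if not ok:
            break  # later steps, such as the deployment, never run
    return log


# Hypothetical steps: lambdas stand in for real build, cache, and deploy calls.
happy_path = run_steps([
    ("build image", lambda: True),
    ("clear cache", lambda: True),
    ("deploy", lambda: True),
])
```

If the cache step fails, the runner stops before the deployment, so a release never goes out against a stale cache.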

For custom work and complex scenarios like these, we use Jenkins. It’s a powerful and highly flexible tool, and its plugin ecosystem gives it a much larger toolkit for these tasks.

However, Jenkins can be challenging to learn, and you have to set up and maintain a separate server to run it. This adds infrastructure complexity that simpler tools avoid.

Use Canary and Blue-Green Deployment Strategies

When you deploy new code, you want to minimize risk. Two proven strategies help you test changes safely before rolling them out to everyone.

Canary deployments work like this: you release the new version to a small group of users first, the “canary.” If something breaks, only a few people are affected while you fix it. If everything works fine, you gradually increase the percentage of users until everyone gets the new version.
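One common way to implement the gradual rollout is to hash each user into a stable bucket from 0 to 99 and serve the new version to buckets below the current percentage. The sketch below takes that approach. The stage percentages and the roll-back-to-zero rule are assumptions for the example, not values from our pipelines.

```python
import hashlib
from typing import Sequence


def bucket(user_id: str) -> int:
    """Map a user to a stable bucket in 0..99, so the same user always lands in the same group."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def serves_canary(user_id: str, canary_percent: int) -> bool:
    """True if this user should receive the new version at the current rollout level."""
    return bucket(user_id) < canary_percent


def next_percent(current: int, healthy: bool,
                 stages: Sequence[int] = (1, 5, 25, 50, 100)) -> int:
    """Advance to the next stage while healthy; drop to 0 (full rollback) otherwise."""
    if not healthy:
        return 0
    for stage in stages:
        if stage > current:
            return stage
    return stages[-1]
```

Because the bucket depends only on the user ID, raising the percentage only adds users to the canary group. Nobody who already has the new version is switched back unless the rollout is rolled back.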

Blue-green deployments run two identical environments side by side. Your current version runs in the “blue” environment, serving all users. You deploy the new version to the “green” environment and test it thoroughly. When you’re confident it works, you switch all traffic from blue to green instantly.
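The traffic switch at the heart of blue-green deployment can be sketched as follows. The `Router` class and the `deploy` and `is_healthy` callables are hypothetical placeholders. In a real setup, the switch would be a load balancer or DNS change, and the health check would hit the idle environment directly.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Router:
    """Tracks which environment currently receives all user traffic."""
    live: str = "blue"

    @property
    def idle(self) -> str:
        """The environment that is not serving users right now."""
        return "green" if self.live == "blue" else "blue"


def release(router: Router,
            deploy: Callable[[str], None],
            is_healthy: Callable[[str], bool]) -> bool:
    """Deploy to the idle environment and switch traffic only if it passes checks."""
    target = router.idle
    deploy(target)
    if not is_healthy(target):
        return False  # users stay on the current environment
    router.live = target  # the switch itself is a single assignment
    return True
```

After a successful release, the previous environment stays in place as the idle one, so it is available as the target of the next release or as a fallback.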

Ready to Ship Code with Confidence?

Which of these best practices apply depends on the specifics of your project. Your tech stack and deployment frequency significantly influence which ones make the most sense.

We’ve tested each of these practices in real-world settings. They consistently help our clients solve problems like slow version releases, manual deployment errors, complex workflows, and a lack of integrated testing.

If you’re struggling with any of these issues, we can create an excellent pipeline for you.

We can also optimize many other DevOps aspects to accelerate your entire software delivery process.

Our unique offering — pre-built AMIX infrastructure — lets you upgrade your entire software delivery process. It covers infrastructure as code, multi-environment setups, Kubernetes, all necessary security measures, advanced CI/CD pipelines, monitoring, and much more.

Contact us to find out more about AMIX, and we’ll gladly show you how it can transform your deployment process, making it less stressful and more seamless.
