
For any project, changing the server architecture is a major step. Most of our IT Outposts clients use our Kubernetes services to make this change, and every experience has been unique.

Migrating to Kubernetes is a popular choice because it offers increased stability, a unified environment, and fast autoscaling.

Microservice architectures are best suited for Kubernetes. To be effective, cluster entities have to be distinct. This allows you to:

- Limit each service's resources precisely

- Establish only the connections each service needs

- Pick the entity type that suits each service (Deployment, ReplicationController, DaemonSet, etc.)
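Here is a minimal Python sketch of what "distinct entities" can look like in practice, assuming a hypothetical `backend` service that should accept traffic only from a hypothetical `frontend` service. It builds two manifests as plain dictionaries: a Deployment whose container has explicit CPU and memory requests and limits, and a NetworkPolicy that admits ingress to the backend Pods only from Pods labeled `app: frontend`. The image name, port, replica count, and resource values are placeholders, and NetworkPolicies are only enforced when the cluster's network plugin supports them.

```python
import json


def deployment(name: str, image: str, port: int, cpu: str, memory: str) -> dict:
    """Build a Deployment manifest whose container has explicit resource limits."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": 2,  # placeholder replica count
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": port}],
                        # Requests guide scheduling; limits cap what the container may use.
                        "resources": {
                            "requests": {"cpu": cpu, "memory": memory},
                            "limits": {"cpu": cpu, "memory": memory},
                        },
                    }],
                },
            },
        },
    }


def allow_only_from(target: str, source: str, port: int) -> dict:
    """Build a NetworkPolicy that admits ingress to `target` Pods only from `source` Pods."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"{target}-allow-{source}"},
        "spec": {
            "podSelector": {"matchLabels": {"app": target}},
            "policyTypes": ["Ingress"],
            "ingress": [{
                "from": [{"podSelector": {"matchLabels": {"app": source}}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }


if __name__ == "__main__":
    # Hypothetical image and values; a v1 List wraps several objects in one document.
    manifests = [
        deployment("backend", "example/backend:1.0", 5000, "250m", "256Mi"),
        allow_only_from("backend", "frontend", 5000),
    ]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": manifests}, indent=2))
```

Because kubectl accepts JSON as well as YAML, the printed output could be piped to `kubectl apply -f -` to create both objects.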

Before we explain the process, think about the real purpose of your Docker Swarm to Kubernetes migration:

- Why do you plan to migrate to Kubernetes?

- Will you have the resources to modify your application’s logic if it needs to be changed? Is your application container-native by design?

- In what ways do you expect Kubernetes to benefit you?

A whole mountain of rework lies just below the tip of the iceberg, so make sure your team is prepared for it and understands what is happening. Otherwise, even a smooth Kubernetes migration will have no practical effect on your application's design or business logic.

In this article, we explain some of the reasons to migrate Docker containers to Kubernetes, show how to facilitate a Docker to Kubernetes migration using Kompose, and share tips on deploying your applications with other tools.


What Is Kubernetes?

Kubernetes (also known as K8s) is an open-source platform for managing containerized applications and services. In simpler terms, Kubernetes helps you deploy and run applications made up of multiple, interconnected containerized parts.

A container is a standardized unit that lets software run reliably from one environment to another. Containers package code together with everything it needs, so apps behave the same way as they move between different computer systems. Docker is one of the most widely used tools for building the container images that Kubernetes runs.

Kubernetes orchestrates groups of connected servers, called a cluster, so they function as one highly available pooled resource. A cluster can run on a single machine or on multiple machines, and can even span data centers and cloud providers. Kubernetes automatically distributes and schedules containers across the cluster's nodes.

If a node goes down or needs maintenance, Kubernetes reschedules its containers on the remaining nodes. It also scales containerized applications, replicating containers and load-balancing traffic across them for availability. This removes much of the operational complexity of running containerized applications in production.

Kubernetes is popular mainly because it lets you run containerized applications at scale in production. It provides mechanisms for deployment, maintaining state, scaling, and observability for these applications. Many major tech companies now run Kubernetes in production, including Google, Microsoft, IBM, and Red Hat.

Kubernetes consulting can offer personalized guidance and recommendations based on your specific goals and application requirements.

What Are the Advantages of Docker and Kubernetes?

Docker and Kubernetes are handy technologies that are changing how we build and run applications. Docker lets you bundle applications into containers. Its biggest advantages are:

- Portability. Docker containers can run anywhere that supports Docker — your laptop, test environment, production servers, etc. This makes deployment much easier.

- Efficient use of resources. Containers share the host's operating system kernel and isolate only the application's processes, so you can use resources more efficiently than with virtual machines.

- Agile development. The lightweight containers mean developers can quickly build, test, and deploy applications right off their laptops. You can iterate rapidly as you create applications.

- Isolation. Each container gets its own isolated environment. This helps you avoid conflicts between containers and adds a security boundary between workloads.

While Docker handles containerization, Kubernetes brings large-scale orchestration and management of containers. The major advantages of Kubernetes include the following:

- Automatic scaling. Kubernetes can grow or shrink containerized applications based on real-time demand. It spins up additional instances gracefully to handle traffic spikes in your application.

- Self-healing. Kubernetes actively restarts and replaces failed containers. This boosts the overall resilience of applications running on it.

- Service discovery and load balancing. It groups containers into services and spreads incoming network traffic across healthy containers automatically. This greatly simplifies management.

- Controlled rollouts. Application deployments can roll out gradually in a controlled, predictable manner. This way, you can significantly reduce deployment risks.
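Automatic scaling is easier to reason about with the arithmetic written out. The Kubernetes documentation describes the HorizontalPodAutoscaler rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipping any change when the ratio is within a tolerance of 1.0 (0.1 by default). The Python sketch below applies that rule and clamps the result to hypothetical `min_replicas`/`max_replicas` bounds; it is a simplification that ignores stabilization windows, Pod readiness, and multiple metrics.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HorizontalPodAutoscaler scaling rule."""
    ratio = current_metric / target_metric
    # Inside the tolerance band the autoscaler leaves the replica count alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured minimum and maximum.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 Pods averaging 90% CPU against a 50% target: ceil(4 * 1.8) = 8 Pods.
    print(desired_replicas(4, 90, 50))
```

For example, 4 replicas averaging 90% CPU against a 50% target scale to 8, while a 52% average stays at 4 because the ratio falls inside the tolerance.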

Docker offers an excellent platform for running containerized applications anywhere with simplicity. However, Kubernetes tackles application management at scale. It takes Docker containers and coordinates entire applications made up of different containerized pieces.

For these reasons, migrating from Docker Compose or Docker Swarm to Kubernetes is often recommended. Kubernetes gives you an adaptable container platform designed for growth and changing needs, letting you ramp up resources with less effort as complexity and traffic volumes grow. Let's explore the reasons to move from Docker Compose to Kubernetes in more detail.

Why Move from Docker Compose to Kubernetes

The following factors explain why you should migrate from Docker Compose to Kubernetes.

Single-host limitation of Compose

Docker Compose runs containers on a single host. When an application workload has to run on multiple hosts or cloud providers, this creates a network communication challenge. Kubernetes spreads containers across many nodes and connects them over a cluster network, and it makes running across multiple clusters and clouds easier to manage.

Single point of failure in Compose

Docker Compose-based applications keep working only as long as the server running them stays up, which makes that server a single point of failure. Kubernetes, by contrast, typically runs in a highly available (HA) configuration, with multiple servers deploying and maintaining the applications. Kubernetes can also scale the number of nodes based on resource utilization, for example with an add-on such as the Cluster Autoscaler.

The extensibility of Kubernetes

Platforms like Kubernetes are highly extensible, which is one reason they are popular with developers. Native resource definitions include Pods, Deployments, ConfigMaps, Secrets, and Jobs, and each serves a different purpose for applications running in the cluster. Through the Kubernetes API server, you can also add your own resource types by defining a CustomResourceDefinition (CRD).
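To show what registering a custom resource involves, here is a minimal Python sketch that builds a CustomResourceDefinition for a hypothetical namespaced `Backup` type in a hypothetical `example.com` group, plus one instance of it. The CRD fields follow the `apiextensions.k8s.io/v1` layout; the `schedule` and `retentionDays` fields are invented for illustration. Applying the CRD only teaches the API server to store and validate `Backup` objects; nothing acts on them until a controller watches them.

```python
import json


def backup_crd(group: str = "example.com") -> dict:
    """CustomResourceDefinition for a hypothetical namespaced `Backup` resource."""
    plural = "backups"
    return {
        "apiVersion": "apiextensions.k8s.io/v1",
        "kind": "CustomResourceDefinition",
        # A CRD's name must be <plural>.<group>.
        "metadata": {"name": f"{plural}.{group}"},
        "spec": {
            "group": group,
            "scope": "Namespaced",
            "names": {"plural": plural, "singular": "backup", "kind": "Backup"},
            "versions": [{
                "name": "v1",
                "served": True,   # this version is exposed through the API
                "storage": True,  # exactly one version is the storage version
                "schema": {"openAPIV3Schema": {
                    "type": "object",
                    "properties": {"spec": {
                        "type": "object",
                        "properties": {
                            "schedule": {"type": "string"},
                            "retentionDays": {"type": "integer", "minimum": 1},
                        },
                        "required": ["schedule"],
                    }},
                }},
            }],
        },
    }


def backup(name: str, schedule: str, retention_days: int, group: str = "example.com") -> dict:
    """An instance of the custom resource; the API server validates it against the schema."""
    return {
        "apiVersion": f"{group}/v1",
        "kind": "Backup",
        "metadata": {"name": name},
        "spec": {"schedule": schedule, "retentionDays": retention_days},
    }


if __name__ == "__main__":
    print(json.dumps(backup_crd(), indent=2))
    print(json.dumps(backup("nightly", "0 2 * * *", 7), indent=2))
```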

Software teams can also write their own operators and controllers. A controller is a process that runs within a Kubernetes cluster following the control loop pattern: it watches the current state of the cluster and keeps adjusting it toward the desired state.

By talking to the Kubernetes API, users can build custom controllers and operators that manage the resources defined by CustomResourceDefinitions.
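The control loop pattern itself is small. The Python sketch below is a toy, not a real controller: `FakeCluster` is a hypothetical in-memory stand-in for the state a controller would read through the Kubernetes API, and `reconcile` performs one observe, compare, act pass for a replica count. A real controller would watch the API server and run this loop continuously.

```python
from dataclasses import dataclass, field


@dataclass
class FakeCluster:
    """Hypothetical in-memory stand-in for cluster state normally read via the API."""
    pods: list[str] = field(default_factory=list)
    next_id: int = 0

    def create_pod(self, app: str) -> str:
        name = f"{app}-{self.next_id}"
        self.next_id += 1
        self.pods.append(name)
        return name

    def delete_pod(self, name: str) -> None:
        self.pods.remove(name)


def reconcile(cluster: FakeCluster, app: str, desired_replicas: int) -> list[str]:
    """One control-loop pass: observe actual state, compare with desired state, act."""
    current = [p for p in cluster.pods if p.startswith(f"{app}-")]
    diff = desired_replicas - len(current)
    actions = []
    if diff > 0:
        # Too few Pods: create the missing ones.
        for _ in range(diff):
            actions.append(f"create {cluster.create_pod(app)}")
    elif diff < 0:
        # Too many Pods: delete the surplus, oldest first.
        for name in current[:-diff]:
            cluster.delete_pod(name)
            actions.append(f"delete {name}")
    return actions  # empty when actual state already matches desired state


if __name__ == "__main__":
    cluster = FakeCluster()
    print(reconcile(cluster, "web", 3))
    cluster.delete_pod("web-1")  # simulate a crashed Pod
    print(reconcile(cluster, "web", 3))
```

Running the same pass again after the state has converged does nothing, which is what makes the loop safe to repeat indefinitely.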

Great ecosystem and open source support of Kubernetes

Kubernetes is a powerful platform that continues to grow rapidly among enterprises. Over the past two years, it has ranked among the most popular platforms and the most desired among software developers. It stands out among container orchestration and management tools.

Cloud-native container orchestration has become synonymous with Kubernetes. The project has more than 1,800 contributors, more than 500 meetups worldwide, and more than 42,000 members in the #kubernetes-dev channel on Slack. The CNCF Cloud Native Landscape also demonstrates the robust ecosystem around Kubernetes, and these cloud-native tools help Kubernetes run more efficiently while reducing the complexity of the system.

Decomposing Your Application and Reinventing It as a Kubernetes-Native App

Now, we shift from theory to practice. Let’s look at how you can go about using the answers you receive from the “goals” questions listed above.

Planning and visualization of your current architecture

When you migrate from Docker Compose to Kubernetes, documentation, visualization tools, and broad planning help you estimate migration time and resource scope. Draw a schematic of your app's parts and the connections between them; a deployment diagram, a hexagonal-architecture view, or even a simple data flow diagram will serve the purpose. This step gives you a full map of modules and their connections, so you understand exactly what is moving to Kubernetes.
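If you prefer to keep the module map in code, a small script can render it for a diagramming tool. This Python sketch turns a dictionary of module connections into a Graphviz DOT digraph; the module names are hypothetical, and the output can be rendered with Graphviz (for example `dot -Tpng app.dot -o app.png`).

```python
def to_dot(connections: dict[str, list[str]]) -> str:
    """Render module -> dependency edges as a Graphviz DOT digraph."""
    lines = ["digraph app {", "  rankdir=LR;"]
    for module in sorted(connections):
        lines.append(f'  "{module}";')
        for target in sorted(connections[module]):
            lines.append(f'  "{module}" -> "{target}";')
    lines.append("}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical modules and the connections between them.
    app = {
        "frontend": ["api"],
        "api": ["auth", "orders", "postgres"],
        "orders": ["postgres", "queue"],
        "auth": ["postgres"],
    }
    print(to_dot(app))
```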

Application architecture must be rethought

At this stage you may feel enthusiastic ("Let's rewrite everything!"), but keep a cool head and order your modules' migration from the simplest to the most difficult. Working this way trains your team and prepares them for the most challenging parts of the project. Alternatively, organize your plan by importance: start with the most important modules, such as those that handle business logic, migrate the core of the app to K8s, and treat the remaining modules as secondary, to be worked on afterward.
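Both orderings can be computed once each module has a score. The Python sketch below adds one assumption that the text does not state: a module moves only after the modules it depends on. It uses the standard library's `graphlib.TopologicalSorter` and works in rounds, taking every module whose dependencies are already placed and sorting that round by a hypothetical `score` (lowest first). Pass a complexity score for the simplest-first strategy, or a negated importance score for the core-first one.

```python
from graphlib import TopologicalSorter


def migration_order(deps: dict[str, set[str]], score: dict[str, int]) -> list[str]:
    """Dependencies first; within each round of ready modules, lowest score first."""
    sorter = TopologicalSorter(deps)  # maps module -> modules it depends on
    sorter.prepare()
    order = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready(), key=lambda m: (score[m], m))
        for module in ready:
            order.append(module)
            sorter.done(module)
    return order


if __name__ == "__main__":
    # Hypothetical modules, dependencies, and complexity scores (1 = simplest).
    deps = {"frontend": {"api"}, "api": {"auth", "orders"},
            "auth": set(), "orders": set(), "reports": set()}
    complexity = {"frontend": 2, "api": 5, "auth": 3, "orders": 4, "reports": 1}
    print(migration_order(deps, complexity))
```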

Your job here will be to solve the following tasks:

- Decide how your app will handle logging

- Select the way your sessions will be stored (e.g., in shared memory)

- Decide how you will handle file storage, since files written to a container's own filesystem are lost when its Pod is replaced

- Test, troubleshoot, and reflect on the new challenges you face with your application
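On the logging side, Kubernetes captures whatever a container writes to stdout and stderr (that is what `kubectl logs` shows), so a common approach is to log there in a structured format and let a cluster-level log collector ship the lines elsewhere. The Python sketch below configures the standard `logging` module to emit one JSON object per line on stdout; the field names and the `app` logger name are illustrative choices, not a required format.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Format each log record as a single JSON line."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "time": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        return json.dumps(payload)


def configure_logging(level: int = logging.INFO) -> logging.Logger:
    """Send all logs to stdout as JSON, replacing any existing root handlers."""
    handler = logging.StreamHandler(sys.stdout)
    handler.setFormatter(JsonFormatter())
    root = logging.getLogger()
    root.handlers[:] = [handler]
    root.setLevel(level)
    return logging.getLogger("app")


if __name__ == "__main__":
    log = configure_logging()
    log.info("order %s created", 42)
```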

Some stages may take more or less time than planned, and that's fine. You may also need to hire additional staff or build up your team's expertise. Every Docker Swarm to Kubernetes migration is unique to the organization.

Here is a brief description of what follows.

- Containerization stage. If your application workloads are not currently running in containers, containerizing them is the first step. Docker is the obvious choice, since it's intuitive, well supported, and widely used, although it isn't the only containerization tool. Use Docker to create images that package your applications and their dependencies. Ideally, an automated continuous integration pipeline should build these images and push each version to a Docker registry (which can be private).

Once your images are in a registry, you are ready to begin using Kubernetes. We won't go into more detail about this step, since this post focuses on Kubernetes migration rather than Docker fundamentals; plenty of good resources are available for getting familiar with Docker.

- Choosing Kubernetes objects. Based on your app's module schematic, choose a Kubernetes object for each module. Kubernetes offers object types and options for most kinds of components, so this stage normally goes smoothly. Next, describe the mapped Kubernetes objects in YAML files.

- Adapting databases. This is commonly done by simply connecting the new Kubernetes-based application to your existing database.

Once your application containers are running, you can decide whether to containerize your entire app, including the database.
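The object-selection step can be sketched as a lookup from module category to workload kind. In this Python sketch the category names and example modules are hypothetical; the mapping reflects common usage (Deployments for stateless services, StatefulSets for stateful ones such as a containerized database, DaemonSets for per-node agents, Jobs and CronJobs for batch work), and any module that exposes a port also gets a Service. The `postgres` entry only applies if you decide to containerize the database; if it stays outside the cluster, the app just needs its address.

```python
# Hypothetical module categories mapped to the workload object that usually fits them.
WORKLOAD_FOR = {
    "stateless-service": "Deployment",
    "stateful-service": "StatefulSet",  # e.g. a containerized database
    "per-node-agent": "DaemonSet",      # e.g. a log shipper on every node
    "batch-task": "Job",
    "scheduled-task": "CronJob",
}
API_VERSION = {
    "Deployment": "apps/v1",
    "StatefulSet": "apps/v1",
    "DaemonSet": "apps/v1",
    "Job": "batch/v1",
    "CronJob": "batch/v1",
}


def plan_objects(modules: list[dict]) -> list[tuple[str, str, str]]:
    """Return (module name, apiVersion, kind) for every object each module needs."""
    objects = []
    for module in modules:
        kind = WORKLOAD_FOR[module["category"]]  # KeyError flags an unmapped category
        objects.append((module["name"], API_VERSION[kind], kind))
        # Modules that accept network traffic also get a Service in front of their Pods.
        if module.get("port"):
            objects.append((module["name"], "v1", "Service"))
    return objects


if __name__ == "__main__":
    modules = [
        {"name": "frontend", "category": "stateless-service", "port": 80},
        {"name": "backend", "category": "stateless-service", "port": 5000},
        {"name": "postgres", "category": "stateful-service", "port": 5432},
        {"name": "log-shipper", "category": "per-node-agent"},
        {"name": "nightly-report", "category": "scheduled-task"},
    ]
    for name, api_version, kind in plan_objects(modules):
        print(f"{name:15} {api_version:9} {kind}")
```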

You should now have a clearer picture of what adopting Kubernetes involves. Next, we'll dig deeper into the technical side of migrating from Docker Compose to Kubernetes.

How to Migrate Docker Containers to Kubernetes: Step-by-Step Process

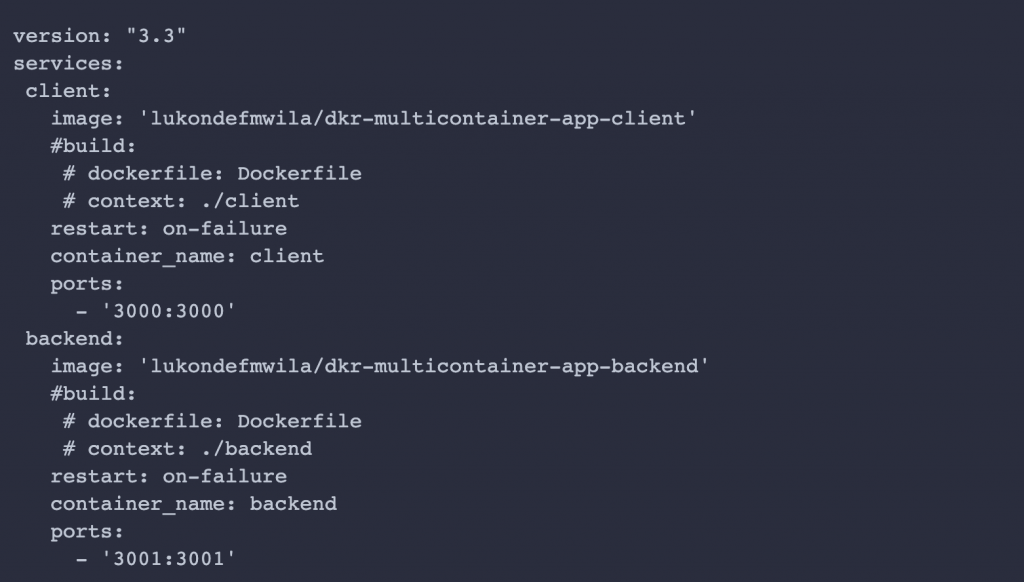

By the end of this section, you will have converted a simple two-tier containerized application, originally written for Docker Compose, to run on Kubernetes. The frontend is built with React.js and the backend with Node.js. You can find the source code here.

Docker Compose configuration

Compose orchestrates multi-container applications using a single configuration file. In this file, you can specify details about the containers you want to run, including build configurations, restart policies, volume settings, and networking configurations. Below is the docker-compose file of the application you will be translating.

In this file, the client and backend containers use images from a Docker Hub repository. To modify the application source code and rebuild the images, comment out the lines that reference the images and uncomment the build configurations of the respective services.

Run the following command to test the application:

Changing the Docker Compose file

Converting the configuration file as it is won't give you the desired result. For each Compose service, Kompose generates the Pods (through a workload object) and a Service, and by default that Service is a ClusterIP Service, which only routes traffic from inside the cluster to the Pods. To expose the application to external traffic, you need to add a label to the services in the Docker Compose file. This label specifies the type of Kubernetes Service that fronts the Pods.

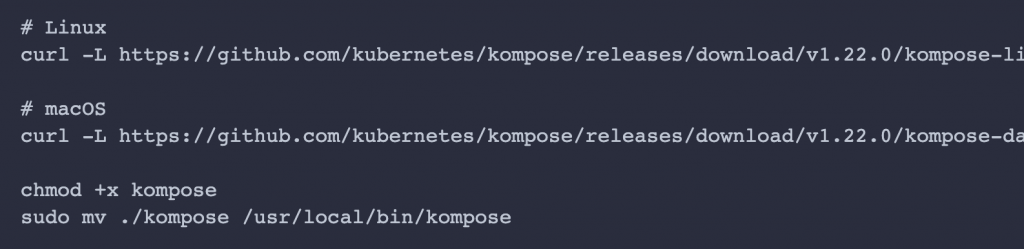
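Kompose reads the Service type from the `kompose.service.type` label, which accepts `clusterip`, `nodeport`, `loadbalancer`, or `headless`. In YAML, the change amounts to adding `kompose.service.type: loadbalancer` under the service's `labels` key. The Python sketch below makes the same change on a Compose model held as a dictionary; the service names, images, and ports are hypothetical stand-ins for the two-tier example, and the helper assumes that existing labels, if any, use the mapping form rather than the list form.

```python
import copy
import json

# Service types that Kompose accepts for the kompose.service.type label.
KOMPOSE_SERVICE_TYPES = {"clusterip", "nodeport", "loadbalancer", "headless"}


def expose(compose: dict, service: str, service_type: str = "loadbalancer") -> dict:
    """Return a copy of a Compose model with a kompose.service.type label on one service."""
    if service_type not in KOMPOSE_SERVICE_TYPES:
        raise ValueError(f"unsupported kompose.service.type: {service_type!r}")
    updated = copy.deepcopy(compose)  # leave the caller's model untouched
    labels = updated["services"][service].setdefault("labels", {})
    labels["kompose.service.type"] = service_type
    return updated


if __name__ == "__main__":
    # Hypothetical service names, images, and ports for the two-tier example.
    compose = {
        "services": {
            "client": {"image": "example/client:latest", "ports": ["3000:3000"]},
            "backend": {"image": "example/backend:latest", "ports": ["5000:5000"]},
        }
    }
    print(json.dumps(expose(compose, "client"), indent=2))
```

A LoadBalancer Service needs a cloud provider or another load-balancer implementation in the cluster; on a local cluster, `nodeport` is often the simpler choice.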

Kompose installation

Below are instructions on how to install Kompose, which you can also find on the official website:

Building Kubernetes Manifests with Kompose

Next, run the commands below in the same directory as docker-compose.yaml to create your Kubernetes manifest files.

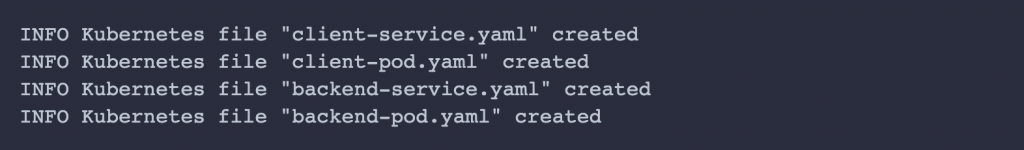

The result will be as follows:

Deploying resources to the Kubernetes cluster

Finally, declare your desired state in the cluster by running kubectl apply and specifying all the files that Kompose generated.
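If you want to script the convert-and-apply sequence, for example in CI, the Python sketch below builds both commands as argument lists and, by default, only prints them (a dry run). The output directory name `k8s` is an assumption; the sketch creates it before running Kompose so the manifests land in it as separate files, and `kubectl apply -f <directory>` then applies every manifest in that directory. Executing for real requires `kompose`, `kubectl`, and a configured kube-context.

```python
import shutil
import subprocess
from pathlib import Path


def migration_commands(compose_file: str = "docker-compose.yaml",
                       out_dir: str = "k8s") -> list[list[str]]:
    """Return the Kompose conversion and kubectl apply commands as argv lists."""
    return [
        # Convert the Compose file into Kubernetes manifests inside out_dir.
        ["kompose", "convert", "-f", compose_file, "-o", out_dir],
        # Apply every manifest file in out_dir to the current cluster.
        ["kubectl", "apply", "-f", out_dir],
    ]


def run(commands: list[list[str]], out_dir: str = "k8s", dry_run: bool = True) -> list[str]:
    """Print (dry run) or execute each command in order, stopping on the first failure."""
    executed = []
    if not dry_run:
        # Create the output directory first so Kompose writes files into it.
        Path(out_dir).mkdir(parents=True, exist_ok=True)
    for cmd in commands:
        line = " ".join(cmd)
        executed.append(line)
        if dry_run:
            print(line)
            continue
        if shutil.which(cmd[0]) is None:
            raise FileNotFoundError(f"{cmd[0]} is not on PATH")
        subprocess.run(cmd, check=True)  # raises CalledProcessError on a non-zero exit
    return executed


if __name__ == "__main__":
    run(migration_commands())
```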

Other Options to Consider

When deploying to Kubernetes, you can also use other tools. The following are a few to consider.

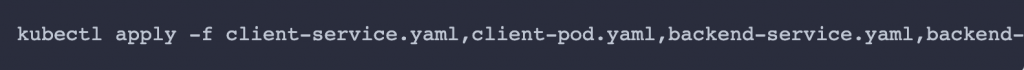

DevSpace

DevSpace is an open-source, Kubernetes-specific command-line interface (CLI) tool. It uses the same kube-context that you use for kubectl or Helm. Development can take place directly inside Kubernetes clusters, which reduces the chance of configuration drift when you deploy an application to a production Kubernetes environment. DevSpace works with platforms such as Rancher, Amazon EKS, Google Kubernetes Engine (GKE) on Google Cloud Platform (GCP), and other public clouds. You can find instructions on how to install DevSpace here.

Skaffold

Google created Skaffold, a CLI tool that manages developer workflows for building, pushing, and deploying applications. While Skaffold continuously deploys your application to a local or remote Kubernetes cluster, you can focus on the ongoing changes to your application. Follow the steps below to install Skaffold. Running skaffold init configures a project to deploy to Kubernetes.

Conclusion

Docker to Kubernetes migration is a big step forward for organizations looking to scale and streamline containerized applications. Adopting Kubernetes unlocks powerful automation, resilience, and ease of operation that you just can’t get in pure Docker environments.

But container migrations also introduce short-term headaches. Careful upfront planning is vital when you migrate Docker Swarm to Kubernetes. Take time to train internal teams properly — ensure they have practical Kubernetes skills and an open mindset around new methods.

The future’s unwritten, but tools like Kubernetes help write it with increasing sophistication and care.

IT Outposts is available for any questions you might have. For businesses of all sizes, we provide Kubernetes Deployment Services and Infrastructure Migration Services.

FAQ

Should I still use Docker with Kubernetes?

You can keep using Docker to build and test container images, but you don't need Docker Engine inside the cluster. Kubernetes runs containers through a runtime that implements its Container Runtime Interface (CRI), such as containerd or CRI-O, and since version 1.24 it no longer ships the dockershim component that connected it directly to Docker Engine.

What does add operational complexity without much benefit is running two orchestration systems side by side, for example keeping part of a workload on Docker Swarm or Compose while the rest runs on Kubernetes. The overhead of two systems can create problems with redundancy and consistency. Kubernetes is designed as a single platform for deploying, scaling, and operating containers, while images are still built by tools such as Docker in your CI pipeline. Migrating your workloads fully to Kubernetes simplifies operations, removes overhead, and opens the door to capabilities like autoscaling, canary deployments, GitOps integration, and much more.

What is the advantage of Kubernetes over Docker Compose?

Docker Compose is useful for coordinating containers on a single machine or small project. But Kubernetes offers far more robust orchestration capabilities for container infrastructure across entire data centers and cloud providers.

Kubernetes automates container deployment, scaling, networking, load balancing, logging, monitoring, and more. It’s ideal for complex microservice applications. The declarative approach also streamlines infrastructure configuration. For large container environments, Kubernetes provides much more advanced, resilient orchestration than Compose.

What can I use instead of Docker in Kubernetes?

Kubernetes can use any container runtime that implements the Container Runtime Interface (CRI), most commonly containerd or CRI-O. Docker Engine is no longer supported directly since Kubernetes 1.24 removed dockershim, although it can still be connected through the cri-dockerd adapter. These runtimes run the same OCI container images that Docker builds, without going through the Docker daemon.

However, switching runtimes just for its own sake rarely brings much benefit. The runtime sits below the Kubernetes layer, so swapping it out doesn't change higher-level Kubernetes capabilities or workflows much.

For most users, Docker remains the most convenient tool for building and testing container images, and the images it produces run unchanged on a containerd- or CRI-O-based cluster. The tooling around the Docker-to-Kubernetes workflow is extensive.

I am an IT professional with over 10 years of experience. My career trajectory is closely tied to strategic business development, sales expansion, and the structuring of marketing strategies.

Throughout my journey, I have successfully executed and applied numerous strategic approaches that have driven business growth and fortified competitive positions. An integral part of my experience lies in effective business process management, which, in turn, facilitated the adept coordination of cross-functional teams and the attainment of remarkable outcomes.

I take pride in my contributions to the IT sector’s advancement and look forward to exchanging experiences and ideas with professionals who share my passion for innovation and success.