
Just as we were getting comfortable with the term “DevOps,” a new buzzword came along — “MLOps.”

The emergence of this term wasn’t surprising, considering how many AI-driven solutions launch every single day. Look at any major tech product, such as Salesforce, Slack, or Dropbox, and you’ll see it showcasing AI features.

The truth is, working with machine learning (ML) models presents challenges that traditional DevOps practices simply weren’t made for.

In the DevOps vs. MLOps debate, you might think MLOps is just another marketing hook. In this article, we explain how it differs from DevOps and what role it plays in keeping AI systems running smoothly.

Let’s start with the definition of DevOps, since MLOps essentially builds on it and adapts its practices to ML systems.

DevOps Refresher

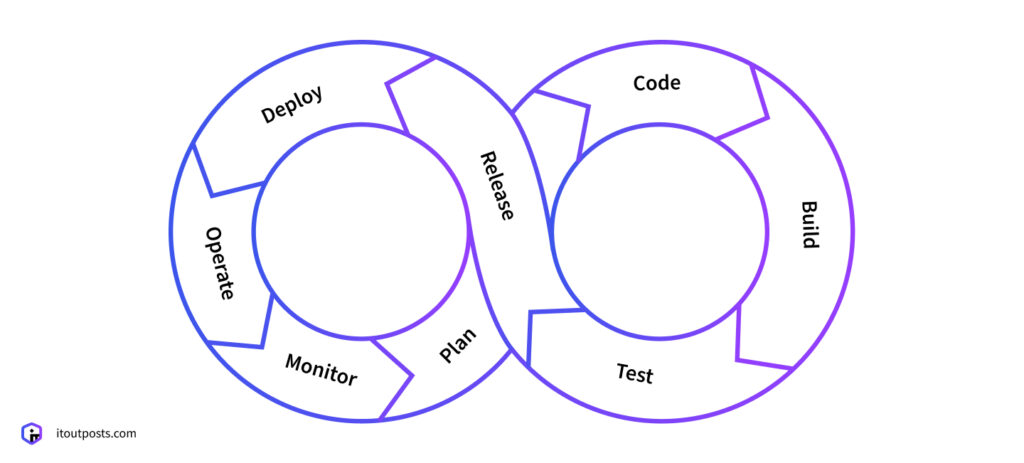

How does DevOps work? It combines practices and tools that help teams deliver better software at a quicker pace. It bridges the traditional gap between development teams (who write code) and operations teams (who run and maintain systems).

Before the DevOps era, software development felt a lot like a relay race. Developers would write the code and then hand it off to the operations teams to make it work.

This handoff often caused problems. Developers had little idea how their code would perform in real-world conditions, while ops teams struggled to run code they hadn’t helped build.


DevOps united these teams by introducing the following practices into workflows that had previously been manual and, therefore, slower:

- Continuous integration & continuous deployment (CI/CD)

- Infrastructure as code (IaC) and centralized version control

- Container deployment

- Cloud infrastructure

- Site reliability engineering (SRE)

- DevSecOps

Now, both the dev and ops departments are responsible for how the software runs, and they tackle any challenges as a group.

The results are clear. Teams that adopt DevOps can update their software much faster than before: features that used to take a long time to launch now roll out in a few days or even hours. Systems are also more reliable, since problems are spotted early, before they affect end users.

However, ML introduced some new challenges that regular DevOps just couldn’t address. This is where MLOps enters the picture, as we’ll see in the next section.

What Is MLOps?

Machine learning has long remained a scientific experiment confined to laboratories. The first ML models go all the way back to the 1950s! Today, as ML shifts from being just experiments to actual commercial products that run on desktops, phones, and smartwatches, it needs a solid pipeline to actually reach users.

MLOps has become a critical focus for businesses that have begun implementing AI products. It significantly reduces the barriers to turning experimental ML models into production-ready solutions.

But the reality at the time of writing is that only 47% of business ML models make it to production, largely because companies lack an MLOps foundation. That makes now an ideal moment to adopt the MLOps mindset and gain a significant competitive edge.

The Team Behind AI Development

Though companies may involve different AI specialists and give them various titles, here are the key experts who collaborate on ML projects, according to IT Outposts:

- Data scientists collect and analyze available data, design algorithms or pick from existing ones, run experiments to find the best solutions for real-world problems, and train ML models.

- Data/ML engineers transform research models into production code.

- MLOps engineers are the orchestrators who automate the entire ML lifecycle, from training to deployment. They establish infrastructure for data scientists and ML engineers to simplify their work and improve the quality of outcomes.

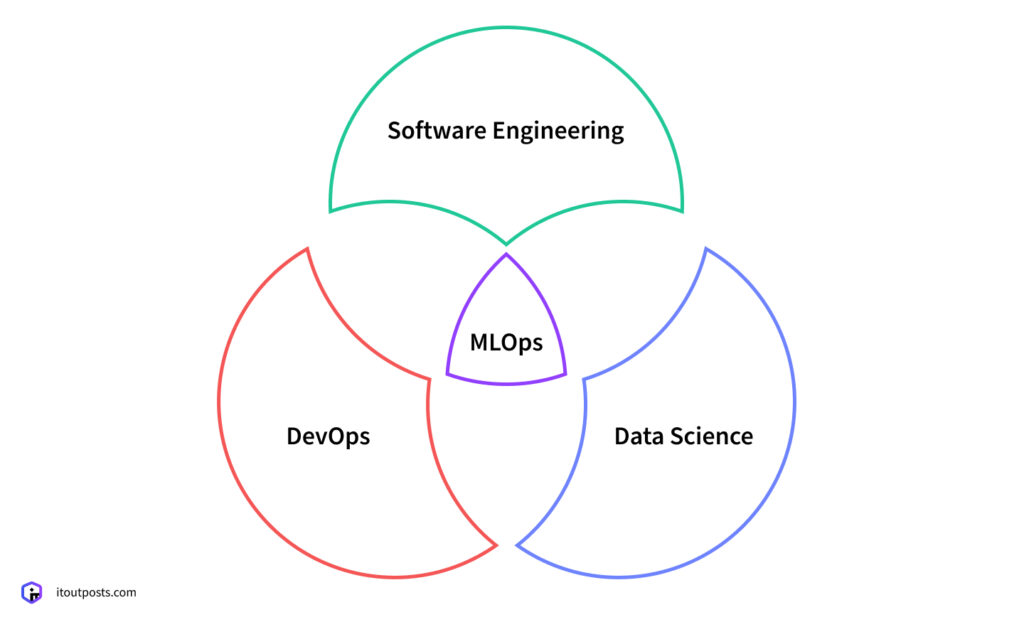

Since MLOps brings together data science, software engineering, and DevOps, your MLOps engineers need to be knowledgeable in all three areas. They also need hands-on experience with MLOps tools.

Why ML Projects Need Special Care

To compare DevOps and MLOps, we’ll first look at the projects they serve. What sets ML projects apart from conventional ones and makes them require a special approach?

A Different Kind of Pipeline

What is an ideal DevOps cycle? You write code, test it, and deploy it. Here’s what a DevOps pipeline looks like:

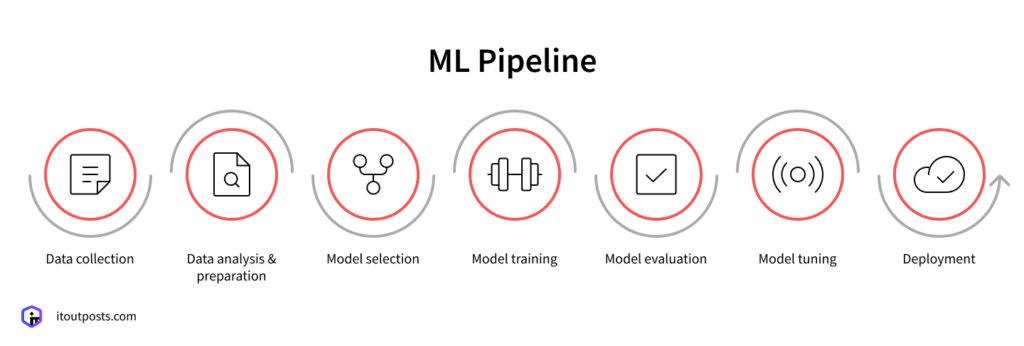

AI flips the script and starts way earlier — with data. Before any coding happens, teams should get data ready, clean it up, and label it properly.

There’s a saying that perfectly applies to the AI field:

“Garbage in, garbage out.”

This basically means your AI is only as good as the data you feed it. Feed it incorrect or biased data, and it will make incorrect or biased decisions. No amount of clever coding can fix bad training data.
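To make “garbage in, garbage out” concrete, here’s a minimal Python sketch of the kind of automated data check an MLOps pipeline might run before training starts. The `Record` fields, the allowed labels, and the plausible age range are hypothetical assumptions for illustration; real pipelines derive their rules from their own data and often use dedicated validation tooling.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical label set for an imagined purchase-prediction model
ALLOWED_LABELS = {"buy", "skip"}


@dataclass
class Record:
    """One hypothetical training example."""
    user_age: Optional[float]
    basket_value: Optional[float]
    label: str


def validate(records: List[Record]) -> List[str]:
    """Return a list of problems found; an empty list means the batch passes."""
    problems: List[str] = []
    for i, r in enumerate(records):
        # Missing values make the remaining checks meaningless, so skip them
        if r.user_age is None or r.basket_value is None:
            problems.append(f"row {i}: missing value")
            continue
        if not 0 < r.user_age < 120:
            problems.append(f"row {i}: implausible age {r.user_age}")
        if r.basket_value < 0:
            problems.append(f"row {i}: negative basket value")
        if r.label not in ALLOWED_LABELS:
            problems.append(f"row {i}: unknown label {r.label!r}")
    return problems


batch = [
    Record(34, 59.9, "buy"),
    Record(None, 12.0, "skip"),
    Record(250, 30.0, "buy"),
    Record(41, -5.0, "maybe"),
]
for problem in validate(batch):
    print(problem)
```

A batch that fails these checks would stop the pipeline before any compute is spent on training, instead of quietly degrading the model.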

Next comes experimentation. Instead of only writing code, AI creators try different model architectures and training approaches. Each experiment consumes a lot of computing power as the model learns from data.
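Because every run is expensive, teams typically record what each experiment tried and how it scored, so results stay reproducible and comparable. Below is a minimal, hypothetical Python sketch of such a log; the architectures, learning rates, GPU hours, and scores are made up, and dedicated experiment trackers such as MLflow handle this at scale.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class Run:
    """One training experiment: what was tried and how it scored."""
    architecture: str
    learning_rate: float
    epochs: int
    gpu_hours: float
    val_accuracy: float


@dataclass
class ExperimentLog:
    runs: List[Run] = field(default_factory=list)

    def record(self, run: Run) -> None:
        self.runs.append(run)

    def best(self) -> Run:
        # Highest validation accuracy wins; on ties, max() keeps the earliest run
        return max(self.runs, key=lambda r: r.val_accuracy)

    def total_gpu_hours(self) -> float:
        # Compute spent across all experiments, useful for cost tracking
        return sum(r.gpu_hours for r in self.runs)

    def to_json(self) -> str:
        # Serialize so results can be stored alongside the trained model
        return json.dumps([asdict(r) for r in self.runs], indent=2)


log = ExperimentLog()
log.record(Run("cnn-small", 1e-3, 10, 4.0, 0.87))
log.record(Run("cnn-large", 1e-3, 10, 16.0, 0.90))
log.record(Run("cnn-large", 3e-4, 20, 32.0, 0.91))
best = log.best()
print(best.architecture, best.learning_rate, log.total_gpu_hours())
```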

Even testing works differently. Conventional testing aims to check if features work as they should. AI models need to prove they can deal with real-world data and make accurate predictions. A model might pass all technical tests but fail to meet business accuracy requirements.
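One way to capture this difference is a release gate that checks model quality, not just code correctness, before deployment. In this minimal Python sketch, the holdout labels, the 0.85 business threshold, and the 0.82 accuracy of the current production model are hypothetical; real gates often use richer metrics than plain accuracy.

```python
from typing import Sequence, Tuple


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Share of predictions that match the true labels."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("expected two non-empty sequences of equal length")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def release_gate(y_true: Sequence[int], y_pred: Sequence[int],
                 min_accuracy: float, production_accuracy: float) -> Tuple[bool, str]:
    """Block deployment if the candidate misses the business bar or underperforms production."""
    acc = accuracy(y_true, y_pred)
    if acc < min_accuracy:
        return False, f"accuracy {acc:.2f} is below the business threshold {min_accuracy:.2f}"
    if acc < production_accuracy:
        return False, f"accuracy {acc:.2f} is worse than production ({production_accuracy:.2f})"
    return True, f"accuracy {acc:.2f} passes"


# Hypothetical holdout labels and candidate-model predictions
holdout = [1, 0, 1, 1, 0, 1, 0, 1, 1, 0]
predicted = [1, 0, 1, 0, 0, 1, 1, 1, 1, 0]
ok, reason = release_gate(holdout, predicted, min_accuracy=0.85, production_accuracy=0.82)
print(ok, reason)
```

Here the candidate model would pass every code-level test, yet the gate still blocks it because it beats the production model while falling short of the business requirement.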

Finally, AI models require retraining as data trends shift.
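In practice, teams often automate the decision of when to retrain. Here’s a minimal Python sketch of such a policy, assuming three hypothetical signals: model age, a drift score, and accuracy on recently labeled production data. The default thresholds are illustrative, not recommendations.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass
class ModelStatus:
    trained_on: date
    drift_score: float    # e.g. a drift metric comparing training and recent inputs
    live_accuracy: float  # accuracy on recently labeled production data


def should_retrain(status: ModelStatus, today: date, max_age_days: int = 90,
                   drift_threshold: float = 0.2, min_accuracy: float = 0.85) -> List[str]:
    """Return the reasons to retrain; an empty list means the model can stay as is."""
    reasons: List[str] = []
    age = (today - status.trained_on).days
    if age > max_age_days:
        reasons.append(f"model is {age} days old")
    if status.drift_score > drift_threshold:
        reasons.append(f"drift score {status.drift_score:.2f} above {drift_threshold}")
    if status.live_accuracy < min_accuracy:
        reasons.append(f"live accuracy {status.live_accuracy:.2f} below {min_accuracy}")
    return reasons


status = ModelStatus(trained_on=date(2024, 1, 10), drift_score=0.27, live_accuracy=0.88)
print(should_retrain(status, today=date(2024, 5, 1)))
```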

That’s why MLOps pipelines look quite different from traditional DevOps ones.

ML’s Resource Appetite

Traditional software projects tend to have more predictable resource needs than ML models. You can roughly estimate server needs based on the number of users and features. Even for peak loads like Black Friday sales, you can plan ahead based on previous traffic patterns and expected user behavior.

ML models, on the other hand, are resource-hungry: they process massive amounts of data during training (which can take weeks or even months), and that can lead to unpredictable and very large cloud bills.

Data scientists might need to retrain models as new data arrives. An experiment might need to run longer than expected. What’s more, companies often play it too safe, overprovisioning cloud resources just in case they run short.
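A back-of-the-envelope calculation shows how these factors compound. The Python sketch below uses entirely hypothetical numbers (eight GPUs, a $2.50 GPU-hour rate, 24-hour runs), not real cloud prices. It compares a planned monthly budget with one where retraining runs double, each run takes 50% longer, and four spare GPUs sit idle all month.

```python
def training_bill(gpus: int, hours_per_run: float, rate_per_gpu_hour: float,
                  runs_per_month: int, overrun: float = 0.0, idle_gpus: int = 0,
                  hours_in_month: float = 730.0) -> float:
    """Rough monthly cloud cost of model training.

    overrun: fraction by which runs exceed their planned duration (0.5 = 50% longer).
    idle_gpus: extra GPUs provisioned "just in case" and left running all month.
    hours_in_month: about 730 hours in an average month (8,760 hours / 12).
    """
    training = gpus * hours_per_run * (1 + overrun) * rate_per_gpu_hour * runs_per_month
    idle = idle_gpus * hours_in_month * rate_per_gpu_hour
    return training + idle


# All inputs are hypothetical
planned = training_bill(gpus=8, hours_per_run=24, rate_per_gpu_hour=2.5, runs_per_month=2)
actual = training_bill(gpus=8, hours_per_run=24, rate_per_gpu_hour=2.5, runs_per_month=4,
                       overrun=0.5, idle_gpus=4)
print(f"planned: ${planned:,.0f}, actual: ${actual:,.0f}")
```

In this made-up scenario, idle capacity alone accounts for $7,300 of the $10,180 bill, which illustrates why overprovisioning deserves as much scrutiny as training time.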

This doesn’t mean ML budgets can’t be optimized; they absolutely can with the right approach. But that approach calls for strategies that differ from regular software cost optimization.

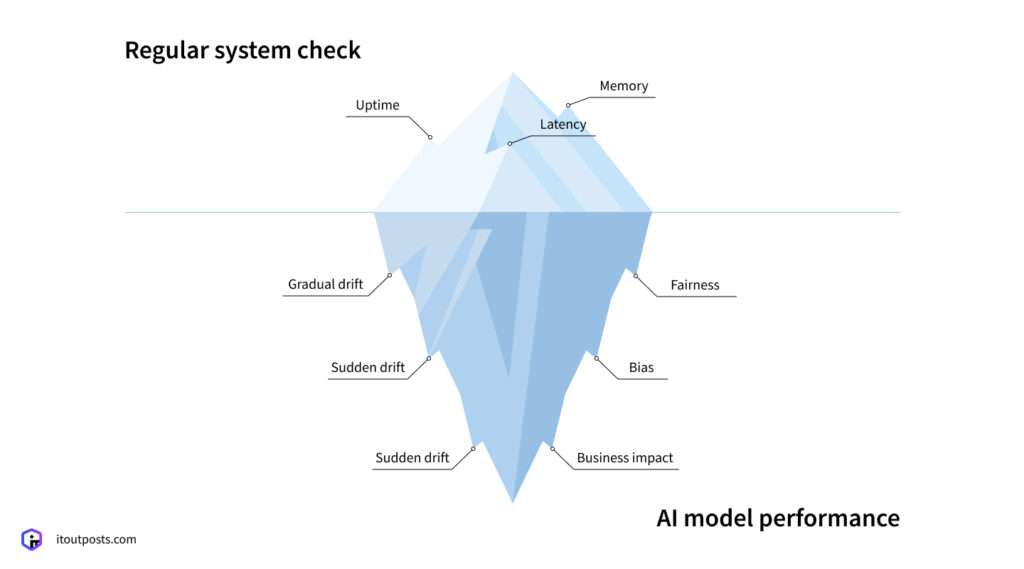

AI Needs Extra Eyes

DevOps monitoring focuses on system health: are servers running, is the app responding quickly, are there any crashes? Regular software either works or it doesn’t (or it’s on the verge of breaking down). It doesn’t degrade in the way ML models do. Thus, the basic system monitoring we use in DevOps can’t catch ML-specific issues.

ML models exist in a gray area where they can technically work perfectly while slowly becoming less accurate. Here are some of the main aspects that need continuous monitoring beyond traditional software metrics:

- Data drift. Do incoming data patterns differ from those the model was trained on? For example, in e-commerce, a model trained on winter shopping patterns might become less accurate in summer. This is gradual drift. Sometimes the world changes dramatically overnight, as it did during the pandemic, which can make AI models suddenly inaccurate or even useless. This is sudden drift.

- Prediction drift. Is the distribution of the model’s predictions changing over time? If a loan approval model suddenly starts approving many more or far fewer applications than usual, something may be wrong.

- Bias and fairness. A model might score 95% overall accuracy while hiding the fact that it works well for one group and fails for another. Historical bias can also creep into models during retraining.

- Business impact metrics. How are the decisions made by the model affecting actual business results? A recommendation system could be running fine, but if it’s not bringing in sales anymore, it’s time to take a closer look.
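Several of these checks come down to simple statistics. The minimal Python sketch below computes the Population Stability Index (PSI), a common drift metric that compares how values fall into bins in training data versus recent production data, using PSI = Σ (aᵢ − eᵢ) · ln(aᵢ / eᵢ), where eᵢ and aᵢ are the expected (training) and actual (production) shares in bin i. It also computes per-group accuracy to surface gaps an overall score can hide. The bin edges, basket values, and groups are hypothetical; applying the same PSI function to model scores instead of inputs is one way to watch for prediction drift.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def _bin_shares(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Share of values per bin; edges must be sorted, bins are (-inf, e0], (e0, e1], ..., (e_last, inf)."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[sum(v > e for e in edges)] += 1  # bin index = number of edges the value exceeds
    return [max(c / len(values), 1e-6) for c in counts]  # small floor avoids log(0)


def psi(expected: Sequence[float], actual: Sequence[float], edges: Sequence[float]) -> float:
    """Population Stability Index between a training sample and a production sample."""
    e = _bin_shares(expected, edges)
    a = _bin_shares(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


def accuracy_by_group(rows: Sequence[Tuple[str, int, int]]) -> Dict[str, float]:
    """rows are (group, true_label, predicted_label); returns accuracy for each group."""
    hits: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for group, y_true, y_pred in rows:
        totals[group] += 1
        hits[group] += int(y_true == y_pred)
    return {g: hits[g] / totals[g] for g in totals}


# Hypothetical basket values: winter training data vs. summer production traffic
train_basket = [20, 25, 30, 35, 40, 45, 50, 55, 60, 65]
summer_basket = [50, 55, 60, 65, 70, 75, 80, 85, 90, 95]
edges = [30, 50, 70]
print(f"PSI: {psi(train_basket, summer_basket, edges):.2f}")

# Hypothetical predictions: perfect for group A, coin-flip for group B
rows = [("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 0, 0), ("B", 1, 0), ("B", 0, 0)]
print(accuracy_by_group(rows))
```

A commonly quoted rule of thumb treats a PSI above about 0.25 as a significant shift, though teams tune their own thresholds. Note that the per-group example scores about 83% overall while group B sits at 50%.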

We’ve only scratched the surface of what it takes to keep AI systems working well in the real world. The field of ML monitoring is still young and changing fast. New types of problems are being discovered as companies roll out more AI systems, and new monitoring techniques are being developed to catch these issues.

The Difference Between MLOps and DevOps

While regular software is like a well-oiled machine that keeps doing the same thing reliably, AI systems are more like living creatures that need constant care and feeding to stay in good shape. And this is exactly what gave rise to MLOps.

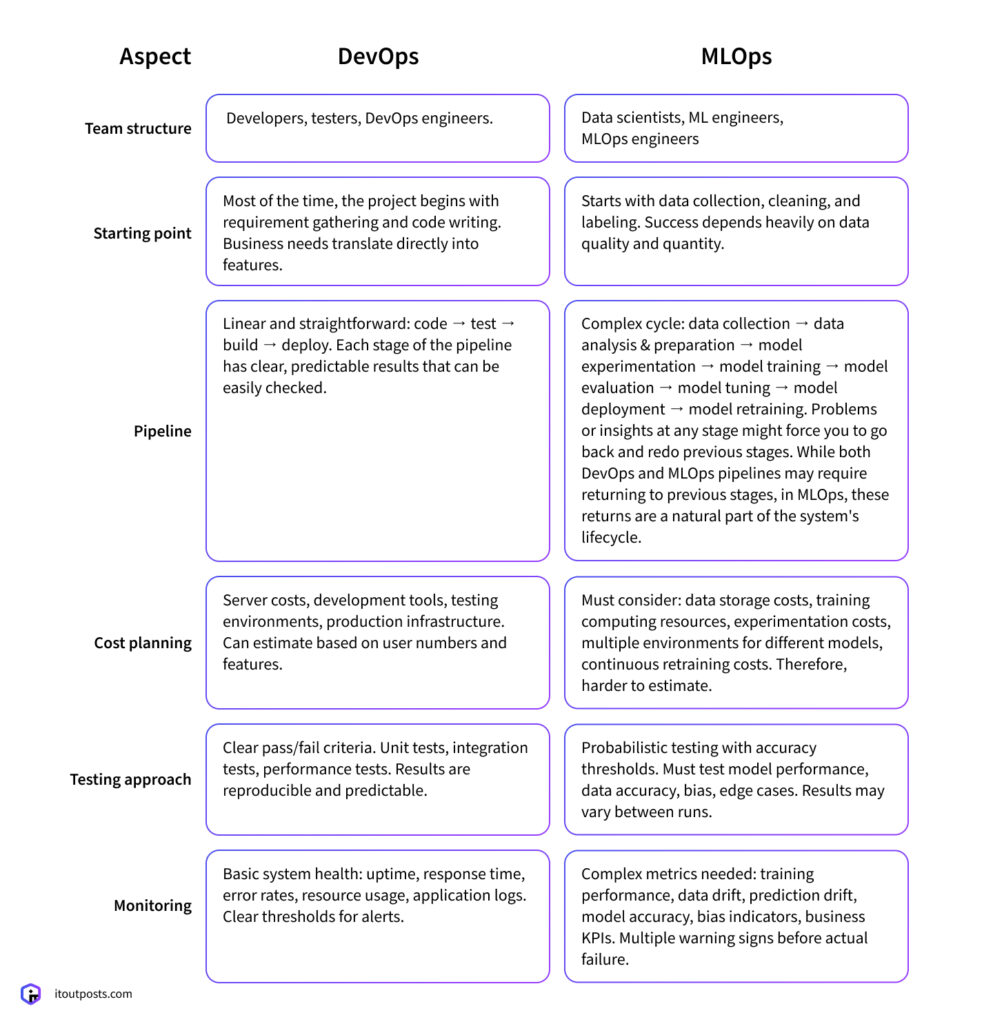

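Based on what we’ve discussed above, here’s a comparison of MLOps vs. DevOps:

| Aspect | DevOps | MLOps |
|---|---|---|
| Starting point | Code | Data, which must be collected, cleaned, and labeled before modeling |
| Core workflow | Write code, test it, deploy it | Prepare data, experiment, train, validate, deploy, and retrain |
| Testing | Checks that features work as intended | Also checks that models make accurate predictions on real-world data and meet business accuracy requirements |
| Resource needs | Fairly predictable; planned from users, features, and past traffic | Compute-hungry training and retraining; costs are hard to predict |
| Monitoring | System health: uptime, response times, crashes | System health plus data drift, prediction drift, bias and fairness, and business impact |
| Key people | Development and operations teams | Data scientists, data/ML engineers, and MLOps engineers |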

The Future Is MLOps…

…and here are two big reasons: AI continues to reshape every industry, and ML models are far too complex to run without the dedicated attention they deserve.

Without this attention, you risk facing a grim reality:

- Your data scientists get bogged down with manual retraining processes while competitors move faster with automated pipelines.

- Training costs can become unpredictable and often sky-high due to poor resource management.

- Models drift off course and get less accurate over time without a solid way to catch any issues.

- And that’s just the tip of the iceberg.

MLOps is still a relatively new concept, and organizations may not understand how to approach it. What often happens? Well, from what we see, data scientists and ML engineers frequently end up doing tasks far beyond their expertise — trying to be ops experts.

But AI creators shouldn’t be deployers, mostly because ML expertise is expensive. As of November 2024, the average ML engineer salary in the US was around $162,217 per year, more than double the national average of $59,428 for all jobs.

When data scientists and ML engineers have to split their time between building models and managing operations, they get less done in their core work.

Most importantly, organizations end up paying premium rates for work these specialists may do less well than dedicated MLOps professionals, which reduces the return on their AI investment.
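A rough calculation shows what this can cost. The Python sketch below uses the two salary figures cited above; the 30% share of time spent on ops work and the four-person team are hypothetical assumptions, so treat the result as an illustration rather than a benchmark.

```python
ML_ENGINEER_SALARY = 162_217  # US average, November 2024 (figure cited in this article)
NATIONAL_AVERAGE = 59_428     # US average across all jobs (figure cited in this article)


def ops_overhead(salary: float, ops_share: float, team_size: int) -> float:
    """Annual salary spent on ops work when ML specialists handle operations themselves.

    ops_share: hypothetical fraction of working time spent on ops tasks (0.0 to 1.0).
    """
    return salary * ops_share * team_size


print(f"Salary ratio: {ML_ENGINEER_SALARY / NATIONAL_AVERAGE:.2f}x")
print(f"Ops overhead: ${ops_overhead(ML_ENGINEER_SALARY, 0.3, 4):,.0f} per year")
```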

Need a Dedicated MLOps Team? IT Outposts Has Your Back

IT Outposts is here to help you adopt an MLOps mindset.

We offer managed MLOps services so your AI creators can do what they do best — create. Our team knows how to speed up the time it takes to launch your AI product.

Contact us and let IT Outposts be your partner in building a strong MLOps foundation and making sure your AI doesn’t just work but thrives and scales.

FAQ

Is MLOps better than DevOps?

It’s not about better or worse — it’s more about having different needs. DevOps is great for traditional software where code behaves consistently once deployed. MLOps exists because AI systems require ongoing retraining.

So, while DevOps focuses on code deployment and system stability, MLOps adds essential layers for managing data quality, model accuracy, and continuous learning. Each has its role in today’s software development scene.

I am an IT professional with over 10 years of experience. My career trajectory is closely tied to strategic business development, sales expansion, and the structuring of marketing strategies.

Throughout my journey, I have successfully executed and applied numerous strategic approaches that have driven business growth and fortified competitive positions. An integral part of my experience lies in effective business process management, which, in turn, facilitated the adept coordination of cross-functional teams and the attainment of remarkable outcomes.

I take pride in my contributions to the IT sector’s advancement and look forward to exchanging experiences and ideas with professionals who share my passion for innovation and success.